Crate.io focuses on database simplicity and horizontal scalability. We aim to make scaling your data from millions to billions of records and beyond as simple as possible.

We have clients who write and query gigabytes of data a minute, store terabytes a day and love the performance and stability that Crate offers. But one question always remained at the back of our minds: when taken to the extreme, what is Crate really capable of?

We specifically wanted to:

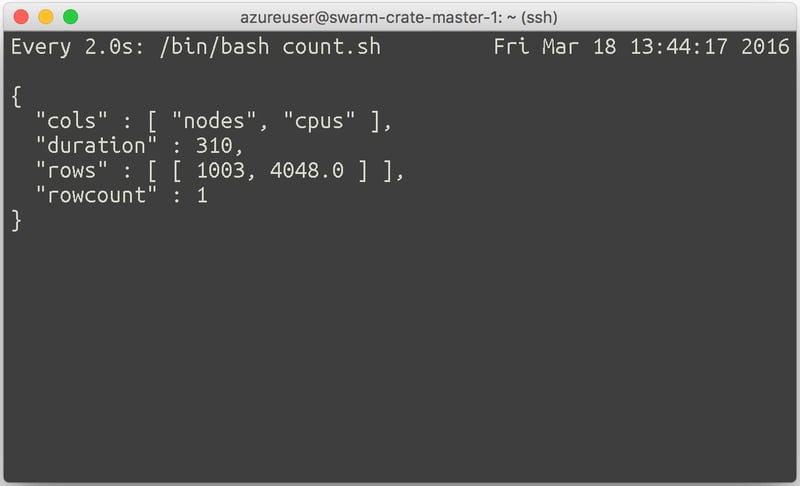

- Deploy a Crate cluster of 1001 nodes.

- Examine the overhead added by cluster management.

- See how fast the cluster could write data.

- Assess the performance of different cluster configurations.

Microsoft Azure generously lent us 8000 cores as the infrastructure for this test, along with engineer time to guide us through any problems and questions we might have. Microsoft wanted to show that the Azure platform is reliable and capable enough for a resource-intensive project that scales rapidly.

The Plan

While discussing the approach of setting up a 1001 node Crate cluster, we immediately thought about using Docker Swarm to launch Crate. However, we also wanted to see how the conventional deployment, using regular packages, would work.

Docker Swarm Setup

Our approach was to start the Crate cluster in a containerized environment using Docker v1.10.3, Docker Swarm v1.0.1 for scheduling Crate instances and Consul v0.5.2 as a backend for node discovery in a Swarm cluster.

The solution had its pros, such as scheduling of Crate nodes and the ability to change settings with minimal effort (to repeat certain experiments). It also had its cons: it added complexity and overhead to the Crate cluster setup. For example, the Consul and Swarm clusters must be deployed first, and they result in higher network load and additional connections.

Conventional Crate Setup

For these reasons, we wanted to be certain that the high network traffic was not due to Crate, so our next plan was to go back to basics and eliminate the potential impact caused by other underlying clusters.

We decided to use the Crate Ubuntu package to set up the cluster and take the simplest approach for spinning it up.

Azure Setup

We wanted to create a 1001 node Crate cluster ready for data import and manipulation. Setting up such a cluster on any kind of infrastructure requires planning, and whilst cloud hosts simplify this process, understanding how to translate your requirements into their paradigms requires research and experimentation.

Azure has several concepts that we needed to understand and make use of to get our cluster started:

- Resource Group: A 'container' that holds related resources of all types for an application.

- Storage Account: A data storage solution allocated to you that can contain Blob, Table, Queue, and File data. Can be stored on SSDs or HDDs.

- Virtual Network (vnet): A representation of a network in the cloud, where you can define DHCP address blocks, DNS settings, security policies, and routing.

- Subnet: Further segmentation for virtual networks.

- Virtual Machine (VM): Represents a particular operating system or application stack.

- Network Interface (NIC): Represents a network interface that can be associated with one or more VMs.

- Network Security Group (NSG): Contains a list of rules that allow or deny network traffic to your VMs in a Virtual Network. Can be associated with subnets or individual VM instances within that subnet.

Azure Resource Limits

These are some platform specific limitations that influenced our architecture decisions:

- 8000 CPU cores per region

- 10000 VMs per region

- 100 VMs in a single availability set

- 800 resources in a single resource group

- 4096 IP addresses in a virtual network (VNet)

Azure Resource Manager Templates

The Azure platform makes it possible to deploy resources using the Azure web interface, the Azure CLI and Azure Resource Manager (ARM) templates.

An ARM template is a JSON-formatted file that provisions all the resources and commands for your application in a single operation. These templates are used in conjunction with the Azure command line tool to trigger a complex cluster deployment quickly.

An ARM template has a simple structure:

    {
      "$schema": "http://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
      "contentVersion": "",
      "parameters": { },
      "variables": { },
      "resources": [ ],
      "outputs": { }
    }

It's worth highlighting the resource dependency management in ARM templates, which provides a cohesive template structure and reliable deployment of resources.
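
To illustrate what dependency management buys you, here is a minimal Python sketch. It is not the ARM engine itself. It assumes three hypothetical resources (a storage account, a network interface and a VM) whose `dependsOn` entries are written as plain names rather than full resource IDs, and it uses a topological sort to derive an order in which every resource is deployed only after the resources it depends on.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical, heavily simplified resources; real templates carry many more properties.
resources = [
    {"name": "vm0", "type": "Microsoft.Compute/virtualMachines",
     "dependsOn": ["nic0", "storage0"]},
    {"name": "nic0", "type": "Microsoft.Network/networkInterfaces",
     "dependsOn": []},
    {"name": "storage0", "type": "Microsoft.Storage/storageAccounts",
     "dependsOn": []},
]


def deployment_order(resources):
    """Return resource names so that every dependency precedes its dependent."""
    graph = {r["name"]: set(r["dependsOn"]) for r in resources}
    return list(TopologicalSorter(graph).static_order())


order = deployment_order(resources)
print(order)  # the VM always comes after its NIC and storage account
```

Resources without dependencies on each other (here the NIC and the storage account) have no fixed relative order, which is what leaves room for deploying them in parallel.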

Deployment Setup

Taking into account the limitations of the Azure platform, especially the number of resources per resource group and the number of virtual machines in a single availability set, we needed a convenient way to redeploy resources. We decided to split the resource setup into logical, self-contained units defined by their function:

- 1 Network infrastructure group

- 1 Docker Swarm managers group

- 1 Crate masters group

- 11 Scale unit groups

- 1 Ganglia monitoring group

Network Infrastructure

The network resource group is a fundamental component of the overall deployment architecture: the units in every other resource group rely on it. The network ARM template defines three deployable types of resources:

- Virtual Network

- Network Security Group

- Public IP addresses used for Swarm Manager VMs

It's possible to reference resources by ID from other groups by providing its type and an explicit path to it:

    resourceId ([resourceGroupName], resourceType, resourceName1, [resourceName2]...)

For instance, we reference the virtual network from the network resource group in each ARM template like this:

    ...
    "virtualNetworkName": "vnet1k1",
    "resourceGroupNetworkName": "1k1network",
    ...
    "vnetID": "[resourceId(variables('resourceGroupNetworkName'), 'Microsoft.Network/virtualNetworks', variables('virtualNetworkName'))]",
    ...
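
To make the cross-group reference more concrete, the following Python sketch builds the kind of fully qualified ID string that such a `resourceId` call resolves to. The helper `resource_id` and the all-zero subscription ID are hypothetical and only mimic the shape of the result; the group and network names are the ones from the snippet above.

```python
def resource_id(subscription_id, resource_group, resource_type, *names):
    """Build a fully qualified Azure resource ID string (illustrative helper).

    resource_type looks like 'Microsoft.Network/virtualNetworks'; one name
    is required per type segment after the provider namespace.
    """
    namespace, *type_segments = resource_type.split("/")
    if len(type_segments) != len(names):
        raise ValueError("need exactly one resource name per type segment")
    path = "".join(f"/{t}/{n}" for t, n in zip(type_segments, names))
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/{namespace}{path}"
    )


# Placeholder subscription ID; the real one comes from the Azure account in use.
SUBSCRIPTION = "00000000-0000-0000-0000-000000000000"

vnet_id = resource_id(
    SUBSCRIPTION, "1k1network", "Microsoft.Network/virtualNetworks", "vnet1k1"
)
print(vnet_id)
```

Because the resource group is part of the ID, any template can point at the shared virtual network regardless of which group it is deployed into.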

Docker Swarm managers

Docker Swarm managers and Consul agents run in the dedicated Docker Swarm managers group.

- 3 Network Interfaces

- 3 Virtual Machines

- 6 Microsoft Azure extensions

- 1 Availability set

- 1 Storage account

The Docker Swarm manager is responsible for the state of the entire Docker Swarm cluster. With a single manager, the whole cluster is vulnerable to the failure of that one instance. Therefore, we rely on the high availability feature of Docker Swarm by adding two manager replica instances.

Crate nodes

To have 1001 Crate nodes in the cluster, 11 scale unit groups must be deployed. To keep the template as simple as possible, while satisfying existing constraints, we settled on the following structure of the template:

- 100 Network Interfaces

- 100 Virtual Machines

- 200 Microsoft Azure extensions

- 1 Availability set

- 5 Storage accounts

This allowed us to proceed with the deployment of scale units iteratively or in parallel.
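
As a quick sanity check, the scale unit layout can be compared against the platform limits listed earlier. The Python sketch below assumes that every item in the list (including each extension) counts as one resource towards the per-group limit; that counting rule is our assumption and not something stated by Azure here.

```python
# Limits quoted in the "Azure Resource Limits" list.
MAX_RESOURCES_PER_GROUP = 800
MAX_VMS_PER_AVAILABILITY_SET = 100

# Resource counts of one scale unit, as listed above.
scale_unit = {
    "network_interfaces": 100,
    "virtual_machines": 100,
    "extensions": 200,
    "availability_sets": 1,
    "storage_accounts": 5,
}
SCALE_UNITS = 11

total_resources = sum(scale_unit.values())
vms_per_set = scale_unit["virtual_machines"] // scale_unit["availability_sets"]
total_vms = SCALE_UNITS * scale_unit["virtual_machines"]

print(total_resources)  # 406, well below the 800-resource limit
print(vms_per_set)      # 100, exactly at the availability set limit
print(total_vms)        # 1100 VMs available for Crate nodes
```

Under this assumption, the availability set limit rather than the resource group limit is what caps a scale unit at 100 VMs.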

Crate Masters

The only difference between the resources in the scale and master units is the type of virtual machines and their number, so we used the scale unit ARM template with a slightly modified configuration. We assumed that the Crate master nodes in a cluster with 1001 nodes might be overloaded with cluster state updates and chose a VM larger than the one the Crate data nodes use.

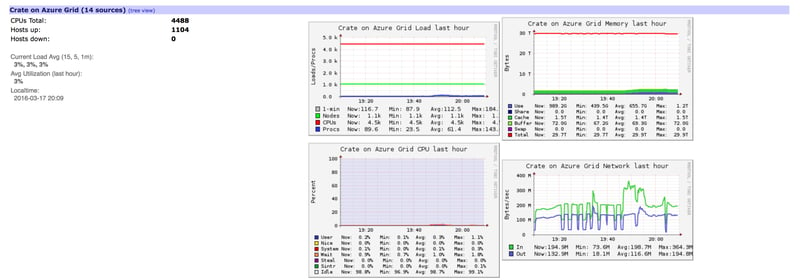

Ganglia monitoring

The final resource group is for Ganglia monitoring. Each scale and master unit had a dedicated VM assigned to it to collect and store aggregated metrics into a storage engine like RRD.

Deployment

The ARM templates are deployed using the Azure CLI. Before a template can be deployed, a resource group must be created in a given location:

    azure group create -n <resourcegroup> -l <location>

    azure group deployment create \
      -f azuredeploy.json \
      -e azuredeploy.parameters.json \
      -g <resourcegroup>
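
With 11 scale units, typing these commands by hand gets tedious. The Python sketch below generates the two invocations for each scale unit group, using only the flags shown above. The group names (`scaleunit01` to `scaleunit11`) and the `westeurope` location are hypothetical, and in practice each unit would probably need its own parameters file.

```python
import shlex


def deployment_commands(group, location):
    """Return the two CLI invocations for one resource group as argument lists."""
    return [
        ["azure", "group", "create", "-n", group, "-l", location],
        ["azure", "group", "deployment", "create",
         "-f", "azuredeploy.json",
         "-e", "azuredeploy.parameters.json",
         "-g", group],
    ]


# Hypothetical names: the source does not state the group names or the region.
groups = [f"scaleunit{i:02d}" for i in range(1, 12)]

for group in groups:
    for cmd in deployment_commands(group, "westeurope"):
        print(shlex.join(cmd))  # print only; nothing is executed here
```

The argument lists could be handed to `subprocess.run` to execute the deployments, one after another or in parallel.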

How Big?

For those interested and keeping count, here are all the Azure VMs we used and how Azure describes them:

- 12x Ganglia VMs: Standard_D4_v2 (8 cores, 28GB RAM, 400GB local SSD)

- 3x Swarm Manager VMs: Standard_D14_v2 (16 cores, 112GB RAM, 800GB local SSD)

- 3x Crate Master VMs: Standard_D5_v2 (16 cores, 56GB RAM, 800GB local SSD)

- 1100x Crate Node VMs: Standard_D12_v2 (4 cores, 28GB RAM, 200GB local SSD)

That all adds up to a gigantic 4448 cores and 30968GB RAM, just for the Crate instances!
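
For those who want to check the numbers, this short Python sketch recomputes the totals from the VM list above. The role names used as dictionary keys are just labels chosen for this example.

```python
# (VM count, cores per VM, RAM in GB per VM), copied from the list above.
fleet = {
    "ganglia": (12, 8, 28),
    "swarm_manager": (3, 16, 112),
    "crate_master": (3, 16, 56),
    "crate_node": (1100, 4, 28),
}


def totals(roles):
    """Sum cores and RAM (GB) over the given roles."""
    cores = sum(fleet[r][0] * fleet[r][1] for r in roles)
    ram_gb = sum(fleet[r][0] * fleet[r][2] for r in roles)
    return cores, ram_gb


crate_cores, crate_ram = totals(["crate_master", "crate_node"])
all_cores, all_ram = totals(fleet)

print(crate_cores, crate_ram)  # 4448 30968
print(all_cores, all_ram)      # 4592 31640
```

Including the Ganglia and Swarm manager VMs, the whole deployment comes to 4592 cores, comfortably within the 8000 cores per region that Azure made available.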

What was Learned

Creating a 1001 node Crate cluster was an experiment of epic proportions, designed to push Crate and Microsoft Azure to their limits.

We learnt more about how Crate behaves at vast scale, and it was an opportunity for us to work alongside our partners at Microsoft and Docker and learn more about their products.

This will not be the last time we try an experiment like this. We will continue to push our technology to its limits, no matter your application, data or cluster size.